On 24 September I had the opportunity to speak at the Cloud Security Alliance Symposium, a free event in support of the Cloud Security Alliance EMEA Congress 2013, held in Edinburgh. My talk focused on real-life examples of cloud security issues and on internal research that we at 7 Elements had been working on. Our earlier paper on cloud security issues can be found here. This blog post covers some of the themes discussed during my talk.

Cloud Basics

What is the Cloud? Well, in short, it is a great marketing gimmick. There is no single thing called the ‘Cloud’. The term is used to describe multiple service offerings, such as Software as a Service (SaaS), Platform as a Service (PaaS) and Infrastructure as a Service (IaaS). These offerings are characterised by on-demand provisioning, the ability to scale rapidly and payment based solely on the resources used at any given point.

Key Risks

What are the key risks presented by using the Cloud? For me, the key risks and some of the issues that an organisation should explore when looking at the Cloud break down as follows:

Legal Jurisdiction

As an organisation, you should be aware of how legal requirements to disclose data may be affected by where that data is geographically stored. If you are based in the UK and use a US-based Cloud provider, consider the impact on your organisation if US courts compel disclosure of your sensitive data. Where the Cloud is used to store or process sensitive personal data, there may also be an impact on your compliance with the relevant regulation (such as the Data Protection Act), which you will need to fully understand and mitigate.

Geographical Location

Different geographical locations mean different legal jurisdictions, each of which will affect your legal and regulatory obligations in that region. This may restrict the type of data that can be stored or processed, or limit how that data can be transferred between locations. Export restrictions on cryptography may also limit your ability to encrypt data in certain locations.

Access to Data

Many Cloud services are based on the use of shared services or multi-tenancy solutions. The benefit to the end user is reduced costs, but this can also lead to security concerns. The data may be at risk of attack from another user of the same Cloud service due to the architecture in use. Consideration should be given to how the Cloud provider has limited the possibility of data compromise.

Data Destruction

With the Cloud, you can grow and shrink your resource requirements. When data on disk is no longer needed, it must be destroyed. You will need assurance that it has been destroyed in line with your organisation’s standards, that the next user of that environment cannot accidentally gain access to your data, and that you have met any regulatory requirements.

Data Availability

The Cloud sells itself as always being there: the data is ‘in the Cloud’, so you will always have access to it. However, the Cloud has its own implications for your organisation’s business continuity plans and disaster recovery approach. Consider scenarios in which the Cloud provider fails or your Internet connection fails; either could render the data unavailable.

Economic Denial of Service

What controls do you have in place to protect against unauthorised provisioning of cloud instances? Based on a simple scenario in which an attacker gains access to an organisation’s provisioning capability (a real-life example of gaining such access is included later in this post), we have estimated that an attacker could cause an individual organisation £14,000 of costs in a single day. More on this will follow in a separate blog post on Economic Denial of Service.
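The inputs behind the £14,000 figure are not given here, so the following is only an illustration of how such an estimate scales. It is a minimal sketch that assumes flat per-instance-hour pricing; the function name `daily_edos_cost` and the figures in the usage line are hypothetical, and real providers bill storage, data transfer and instance types separately.

```python
from decimal import Decimal


def daily_edos_cost(instances: int, hourly_rate_gbp: Decimal, hours: int = 24) -> Decimal:
    """Estimate the cost of unauthorised instances left running.

    Assumption: every instance is billed at the same flat hourly rate.
    """
    if instances < 0 or hours < 0 or hourly_rate_gbp < 0:
        raise ValueError("instances, hours and rate must be non-negative")
    # Cost grows linearly with instance count, price and running time.
    return instances * hourly_rate_gbp * hours


# Hypothetical example: 20 instances at £1.50 per hour for one day.
print(daily_edos_cost(20, Decimal("1.50")))
```

`Decimal` is used rather than `float` so that currency amounts are not subject to binary rounding. Because the cost is a simple product, an attacker who can launch more or larger instances raises it proportionally, which is why provisioning limits and billing alerts matter.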

Cloud Security

The talk then moved on to real-life examples of cloud-based security issues.

Geographical Location

The first focused on the geographical location for data. Did you know that internally created or ‘private’ cloud installations can be configured to automatically connect to the public cloud if capacity is reached?

The following example shows a private cloud, configured to do just this:

Server instantiated on Eucalyptus.

Number of instances running: 1

===============================================

=================================================================

Auto-scaling successful

Instantiated web server: instanceID i-35AE00C1

Number of servers: 2

=================================================================

=================================================================

Cloud bursting successful

Instantiated web server on EC2: instanceID i-32CB323A

Number of servers: 3

=================================================================

=================================================================

The issue here is that data is now outside the organisation’s boundary and stored on Amazon EC2. In this scenario there would be no prior warning and no assessment of the data now held in the public cloud. This could lead to information disclosure or a breach of data handling requirements.
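The bursting behaviour in the output above can be modelled as a simple placement rule. The sketch below is illustrative only: `HybridCloud`, `launch` and `allow_public_burst` are hypothetical names, not part of the Eucalyptus or EC2 APIs. It fills private capacity first and places an instance in the public cloud only when bursting has been explicitly permitted, which is the kind of control that forces a decision about the data before it leaves the organisation’s boundary.

```python
from dataclasses import dataclass, field


@dataclass
class HybridCloud:
    """Hypothetical model of a private cloud that may burst to a public one."""

    private_capacity: int
    allow_public_burst: bool = False  # default: never leave the boundary
    placements: list[str] = field(default_factory=list)

    def launch(self) -> str:
        """Place one new instance and return where it was placed."""
        if self.placements.count("private") < self.private_capacity:
            location = "private"
        elif self.allow_public_burst:
            # Data handled by this instance now sits in the public cloud.
            location = "public"
        else:
            raise RuntimeError("private capacity reached and public bursting is disabled")
        self.placements.append(location)
        return location


# Mirrors the example above: two private servers, then a burst to the public cloud.
cloud = HybridCloud(private_capacity=2, allow_public_burst=True)
print([cloud.launch() for _ in range(3)])
```

With `allow_public_burst` left at its default, the third launch fails instead of silently moving workload, and therefore data, to the public cloud.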

Access to Data

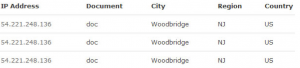

We then looked at the issue of who has access to your data. A recent article outlined how Dropbox were accessing uploaded word documents. The researcher discovered his documents were being opened by Dropbox-owned Amazon EC2 instances automatically 10 minutes after they had been uploaded, although other file types were not being accessed. The following screenshot shows the EC2 IP addresses accessing the documents:

More on this issue can be found in our previous blog post here.
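Spotting this kind of access comes down to checking source addresses in your access logs against known provider address ranges. The following is a minimal sketch using Python’s standard `ipaddress` module. The ranges in `EXAMPLE_PROVIDER_RANGES` are RFC 5737 documentation addresses used as placeholders, not real EC2 ranges; AWS publishes its current ranges as a JSON file, and those ranges change over time. The function name `accesses_from_ranges` is hypothetical.

```python
import ipaddress

# Placeholder ranges (RFC 5737 documentation blocks), NOT real EC2 ranges.
EXAMPLE_PROVIDER_RANGES = tuple(
    ipaddress.ip_network(cidr) for cidr in ("203.0.113.0/24", "198.51.100.0/25")
)


def accesses_from_ranges(log_ips, ranges=EXAMPLE_PROVIDER_RANGES):
    """Return the logged source IPs that fall inside any of the given networks."""
    matched = []
    for raw in log_ips:
        addr = ipaddress.ip_address(raw)
        # Membership test is False when IP versions differ (IPv4 vs IPv6).
        if any(addr in net for net in ranges):
            matched.append(raw)
    return matched


print(accesses_from_ranges(["203.0.113.7", "192.0.2.1", "198.51.100.200"]))
```

A match only shows that a request came from a provider’s address space; attributing it to a specific customer of that provider, as the researcher did, needs further evidence.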

At the end of the talk, I gave a live demonstration of how easy it is to identify valid Amazon Web Services (AWS) access key and secret key values and use them to enumerate the EC2 instances and S3 buckets associated with an account.

$ ruby enumerate.rb

Enumerating AWS account AKWWR5MSIHCI7FH3HIAA

EC2 Instances

——————————————————————————-

[*] i-828b26e4 / running / 54.224.143.100

S3 Buckets

——————————————————————————-

oa-site-backups

– /home/data/_backups/20130902.database.sql.tgz

– /home/data/_backups/20130901.database.sql.tgz

Anyone with access to these credentials could start new cloud instances (potentially leading to an economic denial of service), stop running services (leading to a more conventional denial of service) or, more importantly, access the data currently held in the account’s instances and S3 buckets. This issue will be covered in more detail in our next Cloud Security blog post.

Conclusion

As we have seen, in many ways the Cloud presents the same challenges as managing an organisation’s data securely anywhere else. However, its unique opportunities also bring unique risks. We therefore need to understand those risks, assess the data that we wish to put into the Cloud, and understand how important that data is to the business in terms of confidentiality, availability and integrity.